VR Development Is Going To Be Supported In Unity And Unreal

VR games are already a thing, and people are enjoying the heck out of them, even though consumer VR headsets aren't available just yet. Well, the next step in virtual reality comes in the form of VR development, where designers can build games while inside virtual reality.

Shacknews is reporting that both Unity Technologies and Epic Games will support game and project development within virtual reality. Epic Games was quick to show exactly how this will work with a demonstration video of what level design might look like using the HTC Vive and Oculus Rift VR controllers. Check it out below.

The development process could change drastically with the ability to design games in real time inside a virtual environment. Epic Games' Tim Sweeney and Mike Fricker walk through the benefits of designing games in VR.

The Oculus wireless controllers are used as pointing devices to select objects, pick them up, move them around and manipulate them to fit the designer's needs. Fricker gives game developers a look at how this kind of technology could be used to design levels or construct complex structures within a 3D environment.

As you can see in the video, though, the motion controllers didn't always precisely track what Fricker was doing in real life. Some developers dropped into the comment section on the YouTube page to question whether this is an efficient alternative to designing games with a keyboard and mouse.

Some of you might know that level designers and character artists can spend all day sitting at a desk working on a single character or level. Commenters rightly point out that standing up and waving your arms around in Unreal Engine or Unity could get exhausting real quick. While it's a novel approach, it definitely raises questions about the practicality and efficiency of using a method like this to actually build a game.

As cool as it would be to construct a working prototype in Unreal Engine or Unity this way, the reality is that it's still faster to drag and drop objects with a mouse or type a few world coordinate values on a keyboard's number pad.

The sad reality is that while I think this is a cool opportunity to mix productivity with entertainment, I can't think of a single case where building anything in Unreal or Unity would be faster with wireless controllers than with a keyboard and mouse.

Object scripting would still require programmers to type out the code, something that wouldn't really change in VR. Modeling characters and levels wouldn't change much either, unless the wireless controllers became precise enough to mimic a person molding clay with their hands. To be fair, of all these applications, I think character sculpting in something like ZBrush would likely benefit the most. Creating custom textures on walls, such as graffiti, could be cool, but there's no way that manually painting a wall would be faster than just selecting it and applying a repeating texture pattern.

I suppose we'll have to wait and see how this is refined and used for development before making any final judgments. Either way, the Unreal Engine 4 version of building games in VR is coming soon and will be demonstrated at GDC next month. Unity has yet to announce when its tools will be available, so keep an eye out for further announcements.

Staff Writer at CinemaBlend.

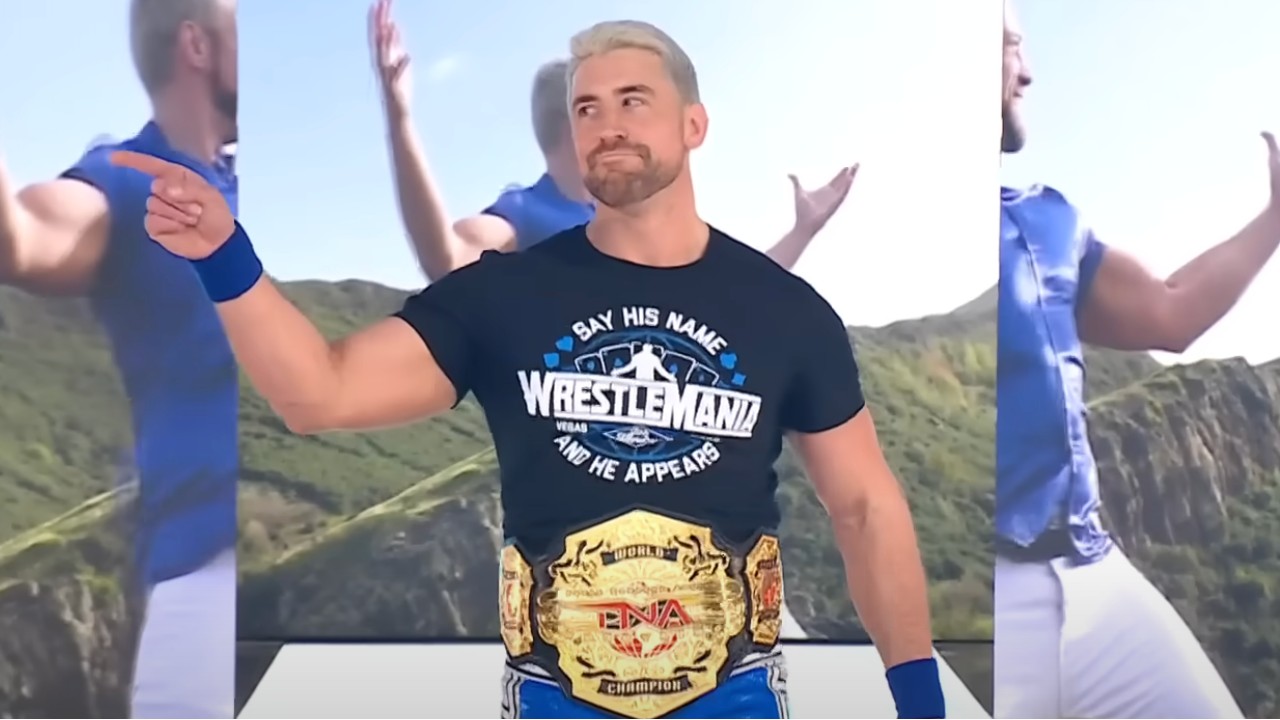

Joe Hendry Reveals How The WWE Snuck Him Into WrestleMania 41 For Randy Orton Match, And Confirms How Seriously They Take Surprise Appearances

Greg's Mom Seemed Like Another Nightmare 90 Day Fiancê Mother-In-Law, But She Changed My Mind

The Last Of Us Season 2 Episode 3 Live Blog: I'm Talking The Aftermath Of Joel's Tragedy, Ellie's Recovery And More